Computer models suggest there is no life in Europa’s underground ocean

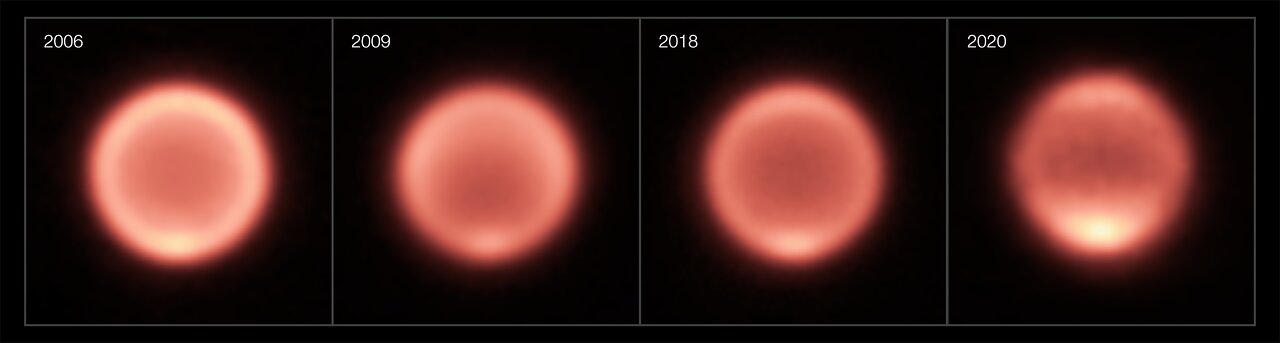

The uncertainty of science: Several different computer simulations now suggest that the underground ocean inside the Jupiter moon Europa is inert and likely harbors no existing lifeforms.

Byrne and his colleagues constructed computer simulations of Europa’s seafloor, accounting for its gravity, the weight of the overlying ocean, and the pressure of water within the seafloor itself. From the simulations, the team computed the strength of the rocks about 1 kilometer below the seafloor, that is, the stress required to force faults in the seafloor to slide and expose fresh rock to seawater.

The rocks comprising Europa’s seafloor are at least 10 times stronger than the stresses applied to them by Jupiter’s gravity and by the convection of material in Europa’s underlying mantle, Byrne said. “The take-home message is that the seafloor is likely geologically inert.”

A second computer model also suggested that the moon’s deep magma is not capable of pushing upward into that sea, reinforcing the first model’s conclusion that the sea is geologically inert, lacking the heat or energy required for life.

Though unconfirmed and uncertain, these results, when looked at honestly, make sense. Europa is a very cold world. An underground ocean might exist due to tidal forces imposed by Jupiter, but that dark and sunless ocean is also likely to be very hostile to life, with not enough energy to sustain it.

As with the water imagined to exist at the poles of the Moon, we go to Europa in the hope of finding life, even if that hope is very ephemeral.